Preface :

Data compression is a software technology that takes information and represents it in compact form. Compression algorithms create these compact representations by detecting patterns in the data and then representing them by using less information. The data can consist of a simple textfile or a binary word processing file. More complex examples include sound and audio files. Most computer users have applied a compression program to save disk space.

There are two types of data compression. With lossless compression, the data compression loses no information. The original data can be recovered exactly from the compressed data. We normally apply this type of compression to "discrete" data, such as text, word processing files, computer applications, and so forth. A lossy compression technique loses some information during compression and the data cannot be recovered exactly. However, this type of compression tends to shrink the data further than lossless compression techniques. Sound files often use this type of compression.

Evaluation of a compression algorithm can include a mathematical complexity analysis that determines its computing requirements. Other criteria assess how much comparison the algorithm produces. When evaluating a lossy compression technique, another criterion is how closely the decompressed image resembles the original. Often, a mathematical analysis is very difficult to perform, so we use the latter criteria. The compression ration is the ratio of the number of bits in the original data to the number of bits in the compressed image. For instance, if a data file contains 500,000 bytes and the compressed data contains 100,000 bytes, the compression ratio is 5:1.

In this section, we will consider a compression technique, known as Huffman compression, that relies on counting the number of occurrences of each 8-bit byte in the data and generating a sequence of optimal binary codes called prefix codes. Algorithms that perform optimization normally execute a series of steps requiring choices. At each step, a greedy algorithm always looks at the data on hand and makes the choice that looks best on the basic of the local data. It hopes that the locally optimal choices will lead to an optimal solution to the whole problem. The Huffman algorithm is an example of a greedy algorithm.

Huffman compression is a popular and effective technique for data compression. The technique tends to very effective for textfiles,in which compression ratios of at least 1.8 (at least 45% reduction) are common. The technique is successful for binary files, but the savings are generally not as good. The algorithm generates a table that contains the frequency of occurrence of each byte in the file. Using these frequencies, the algorithm assigns each byte a string of bits known as its bit code and writes the bit code to the compressed image in place of the original byte. It is hoped that the sum total of the bit codes is smaller than the original file.

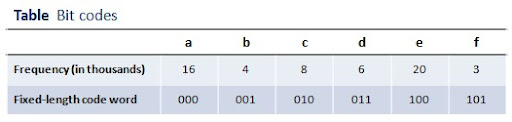

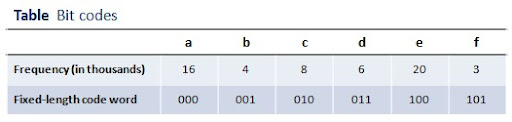

Suppose that a files contains only the ASCII (8-bits) characther 'a' through 'f' and that the characters have the frequencies specified in below table. For example, the character 'c' occurs 20,000 times in the file. If we assign each character a fixed-length bit code, we will need 3 bits for each character ('a'=000, 'b'=001, ..., 'f'=101), as shown in below table. If we replace each character by its bit code, the resulting compressed image use a total of :

(16(3) + 4(3) + 8(3) + 6(3) + 20(3) + 3(3)) x 1000 = 171,000 bits

Computers store data in 8-bit bytes, so the image will have a size of 21,375 bytes. The original file has a sizeof 57,000 bytes, so the compression ratio is 2.67 (Saving of 62.5%)

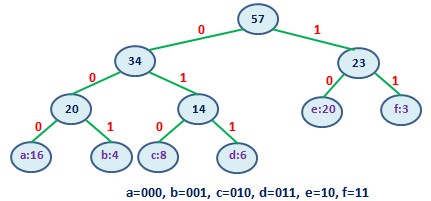

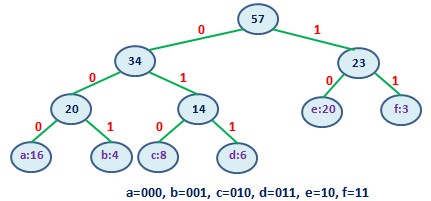

The goal of the Huffman algorithm is to create an "optimal" binary tree that represents the bit codes. We will explain the meaning of optimal when we develop the algorithm. For now, let us explore a tree that illustrates the issues. In the example, each leaf node contains a byte and its frequency of occurrence. Each internal node contains the sum of the frequencies of its children. Starting at the root, a left child designates a bit of 0 and a right child designates a bit of 1. The tree grows until the branches create all bit codes as leaf nodes. The tree structure for the bit codes in upper table is as follows :

Note that, in the tree, no bit code is also a prefix for another bit code. This is guaranteed, because a byte occurs only in a leaf node. Such codes are calledprefix codes. In a tree, each non-leaf node contains a frequency count. This becomes part of our strategy to create a optimal tree. Another issue of optimality involves the right subtree of the root. There are two codes beginning with 10 but no codes beginning with 11. The reason is that the tree is not a full binary tree. A full binary tree is one in which interior node has two children. By converting the tree to a full tree, we can generate better bit codes for 'a'-'f'. If we replace the 23 on level by its subtree, the following full tree result :

The bit codes for 'e' and 'f' are both 1 bit shorter, so the compressed file will now have :

(16(3) + 4(3) + 8(3) + 6(3) + 20(2) + 3(2)) x 1000 = 148,000 bits

which corresponds to a compression ratio of 3:1.

Now that we have seen a tree of bit codes, we can begin to understand the design of a file-compression algorithm. Begin by reading the file and determining the frequencies of the bytes in the files. Then use the frequencies to build a tree of prefix codes that determines unique bit codes for each byte. Write the tree to the compressed file and then reread the source file. For each byte in the second pass, write its corresponding bit code to the compressed file.

To decompress a file, decode the stream of bits by using the prefix codes. To determine the first byte of the original file, start at the root of the tree and read a bit from the compressed file. If the bit is 0, move to the left child; if the bit is 1, move to the right. Read the next bit and move again. Continue until you encounter a leaf node, so there is no ambiguity. Continue in this fashion until reaching the end of the compressed file.

The overriding issue in using bit codes for compression is to choose an optimal tree. It can be shown that the optimal bit codes for a file are always represented by a full tree. Any full tree with n leaf nodes has exactly n-1 internal nodes, and so it has a total of n+(n-1)=2n-1 nodes. Thus, if a file contains nunique bytes, and we compress it by replacing its bytes by bit codes, the corresponding tree will have 2n-1 nodes. There is a simple formula for the number of bits in the compressed image. For each byte b in the original file, let f(b) be the frequency of the byte and d(b) be the depth of the leaf node containing b. The depth of the node is also the number of bits in the bit code for b. We refer to the number of bits necessary to compress the file as the cost of the tree, which we specify by the following relation :

It can also be shown that a Huffman tree generates optimal prefix codes. That is, among all tree representing prefix codes, a Huffman tree gives the minimum cost. The Huffman algorithm constructs the tree so that the most frequently occurring bytes correspond to leaf nodes near the top of the tree, and the least frequently occurring bytes occur at the bottom. In this way, frequently occurring bytes have short bit codes and less frequently occurring bytes have long bit codes. As we describe the algorithm, we illustrate each step with the data in previous table.

Building a Huffman Tree :

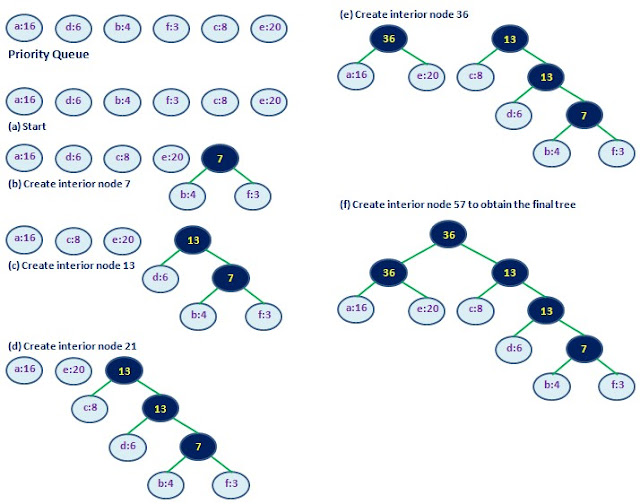

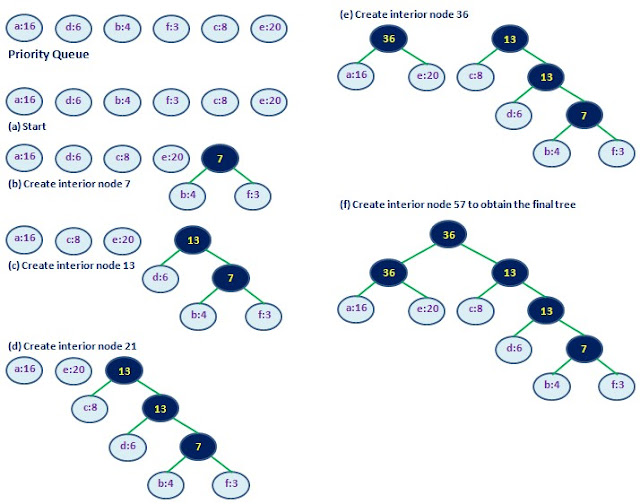

For each of the n bytes in a file, assign the byte and its frequency to a tree node, and insert the node into a minimum priority queue ordered by frequency.

Remove two elements, x and y, from the priority queue, and attach them as children of a node whose frequency is the sum of the frequencies of its children. It does not matter which node is the left child and which node is the right node. The byte value in an interior node is not used. Insert the resulting node into the priority queue.

In a loop, perform this action n-1 times. Each loop iteration creates one of the n-1 internal nodes of the tree. Because each internal node has two children, the resulting tree is full. Below figure shows each step in the construction of a Huffman tree for the sample file :

For the Huffman tree, the compressed file contains :

(16(2) + 4(4) + 8(2) + 6(3) + 20(2) + 3(4)) * 1000 = 134,000 bits

which corresponds to a compression ratio of 3.4.

Building a Huffman tree for file compression is simple in principle. However, its implementation involves a great many details. The implementation uses a variety of tools we have developed, including the HeapPQueue class based on the heap, the bit array, and inheritance. Because the compression algorithm writes binary data to disk and the decompression algorithm reads binary data from disk, we use binary files.

Implementing Huffman Compression :

The HCompress class is designed for use in compressing a file with a file name as an argument. It opens the source file and creates a binary output file by adding the extension .huf to the name. The compression process includes accompanying process messages that are output to console. To use the same HCompress object for additional files, call setFile() with a new source file name. The public method compress() executes the compression steps. To understand some of the internal parameters of the compression process, we provide the methods compressionRatio() and size(). The latter gives the number of nodes in the Huffman tree.

To simply all process and demonstration, we made a static method generateData() to generate fake test data. Afterward, we can use the fake data to be used in the method compress() for demonstration. Also we store the data in HNode class which will be used for generate the binary tree to calculate the bit codes. The implementation is as below :

Output :

Data compression is a software technology that takes information and represents it in compact form. Compression algorithms create these compact representations by detecting patterns in the data and then representing them by using less information. The data can consist of a simple textfile or a binary word processing file. More complex examples include sound and audio files. Most computer users have applied a compression program to save disk space.

There are two types of data compression. With lossless compression, the data compression loses no information. The original data can be recovered exactly from the compressed data. We normally apply this type of compression to "discrete" data, such as text, word processing files, computer applications, and so forth. A lossy compression technique loses some information during compression and the data cannot be recovered exactly. However, this type of compression tends to shrink the data further than lossless compression techniques. Sound files often use this type of compression.

Evaluation of a compression algorithm can include a mathematical complexity analysis that determines its computing requirements. Other criteria assess how much comparison the algorithm produces. When evaluating a lossy compression technique, another criterion is how closely the decompressed image resembles the original. Often, a mathematical analysis is very difficult to perform, so we use the latter criteria. The compression ration is the ratio of the number of bits in the original data to the number of bits in the compressed image. For instance, if a data file contains 500,000 bytes and the compressed data contains 100,000 bytes, the compression ratio is 5:1.

In this section, we will consider a compression technique, known as Huffman compression, that relies on counting the number of occurrences of each 8-bit byte in the data and generating a sequence of optimal binary codes called prefix codes. Algorithms that perform optimization normally execute a series of steps requiring choices. At each step, a greedy algorithm always looks at the data on hand and makes the choice that looks best on the basic of the local data. It hopes that the locally optimal choices will lead to an optimal solution to the whole problem. The Huffman algorithm is an example of a greedy algorithm.

Huffman compression is a popular and effective technique for data compression. The technique tends to very effective for textfiles,in which compression ratios of at least 1.8 (at least 45% reduction) are common. The technique is successful for binary files, but the savings are generally not as good. The algorithm generates a table that contains the frequency of occurrence of each byte in the file. Using these frequencies, the algorithm assigns each byte a string of bits known as its bit code and writes the bit code to the compressed image in place of the original byte. It is hoped that the sum total of the bit codes is smaller than the original file.

Suppose that a files contains only the ASCII (8-bits) characther 'a' through 'f' and that the characters have the frequencies specified in below table. For example, the character 'c' occurs 20,000 times in the file. If we assign each character a fixed-length bit code, we will need 3 bits for each character ('a'=000, 'b'=001, ..., 'f'=101), as shown in below table. If we replace each character by its bit code, the resulting compressed image use a total of :

(16(3) + 4(3) + 8(3) + 6(3) + 20(3) + 3(3)) x 1000 = 171,000 bits

Computers store data in 8-bit bytes, so the image will have a size of 21,375 bytes. The original file has a sizeof 57,000 bytes, so the compression ratio is 2.67 (Saving of 62.5%)

The goal of the Huffman algorithm is to create an "optimal" binary tree that represents the bit codes. We will explain the meaning of optimal when we develop the algorithm. For now, let us explore a tree that illustrates the issues. In the example, each leaf node contains a byte and its frequency of occurrence. Each internal node contains the sum of the frequencies of its children. Starting at the root, a left child designates a bit of 0 and a right child designates a bit of 1. The tree grows until the branches create all bit codes as leaf nodes. The tree structure for the bit codes in upper table is as follows :

Note that, in the tree, no bit code is also a prefix for another bit code. This is guaranteed, because a byte occurs only in a leaf node. Such codes are calledprefix codes. In a tree, each non-leaf node contains a frequency count. This becomes part of our strategy to create a optimal tree. Another issue of optimality involves the right subtree of the root. There are two codes beginning with 10 but no codes beginning with 11. The reason is that the tree is not a full binary tree. A full binary tree is one in which interior node has two children. By converting the tree to a full tree, we can generate better bit codes for 'a'-'f'. If we replace the 23 on level by its subtree, the following full tree result :

The bit codes for 'e' and 'f' are both 1 bit shorter, so the compressed file will now have :

(16(3) + 4(3) + 8(3) + 6(3) + 20(2) + 3(2)) x 1000 = 148,000 bits

which corresponds to a compression ratio of 3:1.

Now that we have seen a tree of bit codes, we can begin to understand the design of a file-compression algorithm. Begin by reading the file and determining the frequencies of the bytes in the files. Then use the frequencies to build a tree of prefix codes that determines unique bit codes for each byte. Write the tree to the compressed file and then reread the source file. For each byte in the second pass, write its corresponding bit code to the compressed file.

To decompress a file, decode the stream of bits by using the prefix codes. To determine the first byte of the original file, start at the root of the tree and read a bit from the compressed file. If the bit is 0, move to the left child; if the bit is 1, move to the right. Read the next bit and move again. Continue until you encounter a leaf node, so there is no ambiguity. Continue in this fashion until reaching the end of the compressed file.

The overriding issue in using bit codes for compression is to choose an optimal tree. It can be shown that the optimal bit codes for a file are always represented by a full tree. Any full tree with n leaf nodes has exactly n-1 internal nodes, and so it has a total of n+(n-1)=2n-1 nodes. Thus, if a file contains nunique bytes, and we compress it by replacing its bytes by bit codes, the corresponding tree will have 2n-1 nodes. There is a simple formula for the number of bits in the compressed image. For each byte b in the original file, let f(b) be the frequency of the byte and d(b) be the depth of the leaf node containing b. The depth of the node is also the number of bits in the bit code for b. We refer to the number of bits necessary to compress the file as the cost of the tree, which we specify by the following relation :

It can also be shown that a Huffman tree generates optimal prefix codes. That is, among all tree representing prefix codes, a Huffman tree gives the minimum cost. The Huffman algorithm constructs the tree so that the most frequently occurring bytes correspond to leaf nodes near the top of the tree, and the least frequently occurring bytes occur at the bottom. In this way, frequently occurring bytes have short bit codes and less frequently occurring bytes have long bit codes. As we describe the algorithm, we illustrate each step with the data in previous table.

Building a Huffman Tree :

For each of the n bytes in a file, assign the byte and its frequency to a tree node, and insert the node into a minimum priority queue ordered by frequency.

Remove two elements, x and y, from the priority queue, and attach them as children of a node whose frequency is the sum of the frequencies of its children. It does not matter which node is the left child and which node is the right node. The byte value in an interior node is not used. Insert the resulting node into the priority queue.

In a loop, perform this action n-1 times. Each loop iteration creates one of the n-1 internal nodes of the tree. Because each internal node has two children, the resulting tree is full. Below figure shows each step in the construction of a Huffman tree for the sample file :

For the Huffman tree, the compressed file contains :

(16(2) + 4(4) + 8(2) + 6(3) + 20(2) + 3(4)) * 1000 = 134,000 bits

which corresponds to a compression ratio of 3.4.

Building a Huffman tree for file compression is simple in principle. However, its implementation involves a great many details. The implementation uses a variety of tools we have developed, including the HeapPQueue class based on the heap, the bit array, and inheritance. Because the compression algorithm writes binary data to disk and the decompression algorithm reads binary data from disk, we use binary files.

Implementing Huffman Compression :

The HCompress class is designed for use in compressing a file with a file name as an argument. It opens the source file and creates a binary output file by adding the extension .huf to the name. The compression process includes accompanying process messages that are output to console. To use the same HCompress object for additional files, call setFile() with a new source file name. The public method compress() executes the compression steps. To understand some of the internal parameters of the compression process, we provide the methods compressionRatio() and size(). The latter gives the number of nodes in the Huffman tree.

To simply all process and demonstration, we made a static method generateData() to generate fake test data. Afterward, we can use the fake data to be used in the method compress() for demonstration. Also we store the data in HNode class which will be used for generate the binary tree to calculate the bit codes. The implementation is as below :

Output :

This message was edited 12 times. Last update was at 21/03/2011 14:45:20

沒有留言:

張貼留言